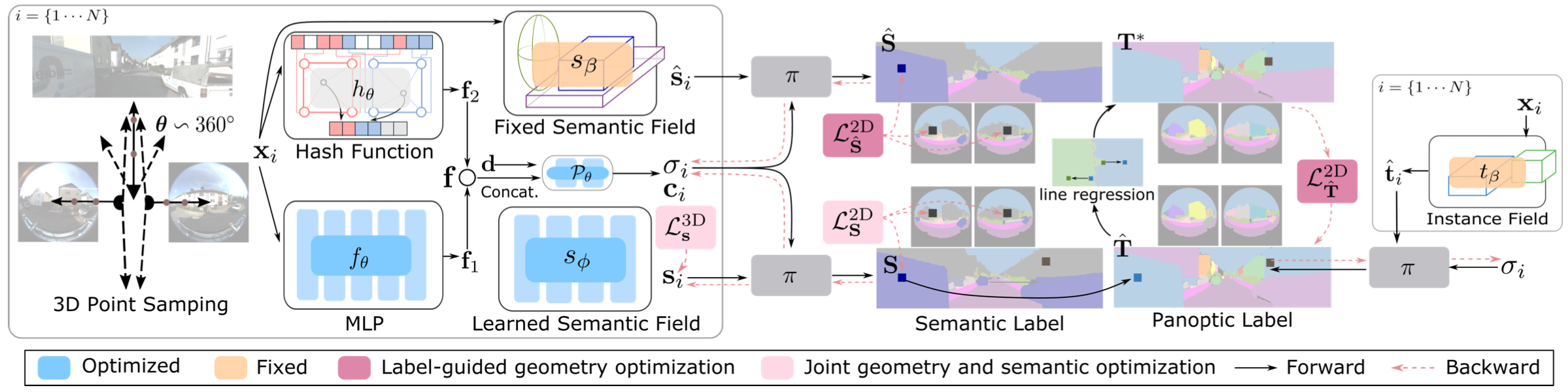

Overall Framework

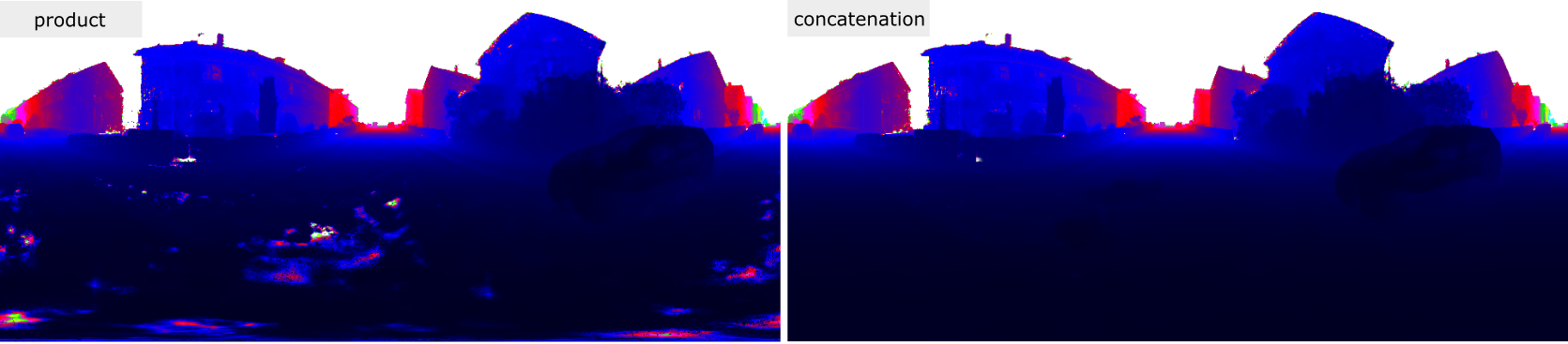

At each 3D location, we combine scene features from a deep MLP and multi-resolution hash grids to jointly model geometry, appearance, and semantics. We use dual semantic fields, a fixed one and a learned one, which yield two sets of semantic categorical logits. Our method renders panoptic labels by combining the learned semantic field with a fixed instance field determined by the 3D bounding primitives. The losses applied to the fixed semantic and instance fields improve the geometry. The loss on the learned semantic field improves the 3D semantic predictions, which makes it possible to resolve label ambiguity in regions where bounding boxes intersect.
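To make the panoptic composition step more concrete, here is a minimal NumPy sketch for a single ray. It is not the authors' implementation. It assumes axis-aligned boxes as the bounding primitives, standard alpha-compositing weights, and a simple illustrative rule for intersections: a sample inside several boxes is assigned to the box whose class the learned semantic field considers most likely at that sample. All function names are hypothetical.

```python
import numpy as np

def points_in_boxes(points, box_min, box_max):
    """(N, K) mask: True where sample n lies inside box k.
    Assumption: axis-aligned boxes, for simplicity."""
    p = points[:, None, :]  # (N, 1, 3)
    return np.all((p >= box_min[None]) & (p <= box_max[None]), axis=-1)

def resolve_instances(inside, box_classes, sem_probs):
    """Fixed instance field: box index per sample, or -1 if in no box.
    Illustrative rule: at box intersections, pick the box whose class has
    the highest learned semantic probability at that sample."""
    score = sem_probs[:, box_classes]            # (N, K)
    score = np.where(inside, score, -np.inf)     # ignore boxes not containing the sample
    inst = np.argmax(score, axis=1)
    inst[~inside.any(axis=1)] = -1               # samples outside every box
    return inst

def volume_weights(sigma, delta):
    """Alpha-compositing weights w_i = T_i * alpha_i along one ray."""
    alpha = 1.0 - np.exp(-sigma * delta)
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))
    return trans * alpha

def render_panoptic(points, sigma, delta, sem_logits,
                    box_min, box_max, box_classes, thing_classes):
    """Return (semantic class, instance id) for one ray; id -1 means 'stuff'."""
    z = sem_logits - sem_logits.max(axis=1, keepdims=True)
    probs = np.exp(z) / np.exp(z).sum(axis=1, keepdims=True)  # per-sample softmax
    w = volume_weights(sigma, delta)                          # (N,)
    sem_class = int(np.argmax(w @ probs))                     # rendered learned semantics
    if sem_class not in thing_classes:
        return sem_class, -1
    inst = resolve_instances(points_in_boxes(points, box_min, box_max),
                             box_classes, probs)
    hist = np.zeros(len(box_classes))
    valid = inst >= 0
    np.add.at(hist, inst[valid], w[valid])                    # render instance field
    return (sem_class, int(np.argmax(hist))) if hist.sum() > 0 else (sem_class, -1)

# Usage: one ray along +x; box 0 (class 1) and box 1 (class 2) overlap for x in [2, 3].
points = np.array([[0.5, 0, 0], [1.5, 0, 0], [2.5, 0, 0], [3.5, 0, 0]], dtype=float)
sigma = np.array([0.0, 0.0, 5.0, 5.0])
delta = np.ones(4)
sem_logits = np.array([[5, 0, 0], [5, 0, 0], [0, 0, 5], [0, 0, 5]], dtype=float)
box_min = np.array([[0, -1, -1], [2, -1, -1]], dtype=float)
box_max = np.array([[3, 1, 1], [5, 1, 1]], dtype=float)
box_classes = np.array([1, 2])
result = render_panoptic(points, sigma, delta, sem_logits,
                         box_min, box_max, box_classes, thing_classes={1, 2})
```

In this toy ray, the first visible sample lies in the overlap of both boxes. Because the learned logits there favor class 2, the sample is assigned to box 1, so the ray renders as class 2 with instance 1 rather than inheriting box 0's label. The hard argmax is only a readable stand-in; a trainable system would more likely keep these quantities soft so the losses can be backpropagated.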